Index Coverage Report – Find and Fix Indexing Issues

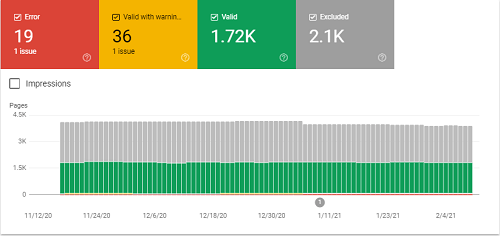

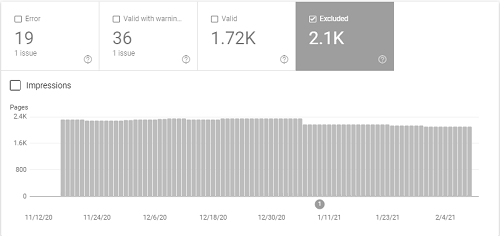

The Index Coverage report was added to Google Search Console when the tool was updated in 2018. The report shows the state of the URLs on your website that Google has crawled or tried to crawl. It also shows which pages have been indexed and which have not.

The summary page shows the results for all the URLs on your website, grouped by status and by the reason for each status. Read on to find out how you can get Google to index your pages using the Index Coverage report in Search Console.

Index Coverage Report: Summary Page

Google crawls your website using its primary crawler, and sometimes it crawls a subset of your pages using its secondary crawler. The two crawlers are Googlebot’s smartphone and desktop crawlers, and each simulates a user visiting your website on that type of device. On the summary page, you should ideally see the number of valid indexed pages increase gradually as your website grows.

As mentioned, the summary page groups URLs by status and then sorts them by reason. Within each group, start with the most impactful errors.

To make the report easier to read, Google uses a color scheme to highlight the statuses. Each status is covered in more detail further down.

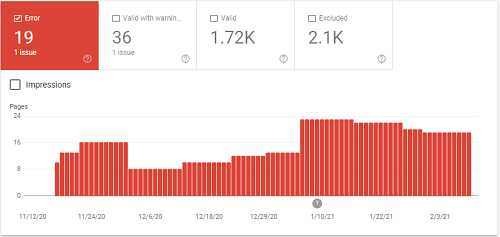

- Red: Error – Pages listed here are not indexed, and the reasons vary. Fix these errors first before moving on to the other statuses.

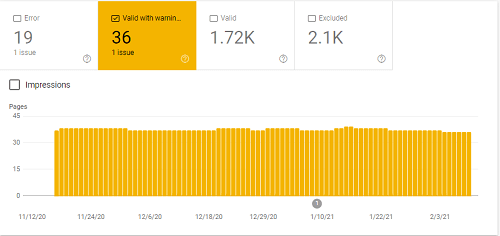

- Yellow: Valid with Warnings – Pages listed here are indexed, but they have an issue that you should know about.

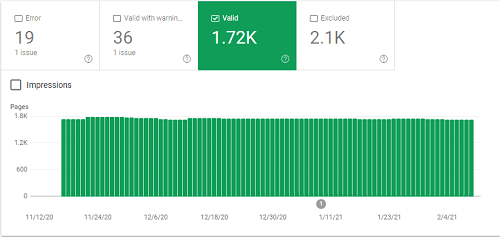

- Green: Valid – Pages listed here are all good and indexed by Google.

- Grey: Excluded – Pages listed here are not indexed, usually because Google believes that was your intention. You might have used a noindex tag on the pages. Google can also exclude pages because they duplicate a canonical page that is already indexed.

Red: Errors

There are different reasons why your pages will be listed in this status. See below for the different errors you will find under this status.

Server error (5xx)

This error means that your server returned a 500-level error when Google tried to crawl the page. A 5xx status means the server was aware that it had encountered an error or was otherwise incapable of performing the request. To fix server connectivity errors, you can do any of the following:

- Check that you did not unintentionally block Google

- You can control how your website is crawled by using robots.txt

- Check that your website’s hosting server is not down, misconfigured, or overloaded

- Reduce excessive page loading for dynamic page requests, because such pages can take too long to respond

Redirect Error

When Google crawled your page, it experienced a redirect error. The redirect chain could be too long, it could loop back on itself, the redirect URL could eventually exceed the maximum URL length, or there could be a bad or empty URL in the chain. Google suggests using a web debugging tool such as Lighthouse to get more details about the redirect.
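To make these failure modes concrete, here is a minimal sketch that walks a redirect map and reports the first problem it finds. The function name, the `{source: target}` map, and both limits (`MAX_HOPS`, `MAX_URL_LENGTH`) are assumptions chosen for illustration; they are not Google's actual thresholds.

```python
from typing import Dict, List, Tuple

MAX_HOPS = 10          # assumed limit for this sketch, not Google's real value
MAX_URL_LENGTH = 2048  # assumed limit for this sketch, not Google's real value


def trace_redirects(start: str, redirects: Dict[str, str]) -> Tuple[str, List[str]]:
    """Follow a {source: target} redirect map and report the first problem found."""
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        target = redirects[current]
        # An empty or non-HTTP target counts as a bad URL in the chain.
        if not target or not target.startswith(("http://", "https://")):
            return "bad or empty URL", chain
        if len(target) > MAX_URL_LENGTH:
            return "URL too long", chain
        # Landing on a URL we already visited means the chain loops.
        if target in seen:
            return "redirect loop", chain + [target]
        chain.append(target)
        seen.add(target)
        if len(chain) - 1 > MAX_HOPS:
            return "chain too long", chain
        current = target
    return "ok", chain
```

You would build the redirect map from your own server configuration or crawl data, then call `trace_redirects` for each URL that the report flags.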

Submitted URL Blocked by Robots.txt

You submitted the page for indexing, but it is blocked by your website’s robots.txt file. The page was likely submitted through the XML sitemap, so if you don’t want it to be crawled or indexed, remove it from the sitemap. If you do want it indexed, remove or change the robots.txt rule that blocks it. You can use the robots.txt tester to find the rule that is blocking your URL.
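If you want a quick local check before opening the robots.txt tester, a sketch like the following can help. It uses Python's standard `urllib.robotparser`; the helper name and the sample rules are made up for illustration. Note that the standard-library parser does not implement every matching rule Google supports (for example wildcards), so treat the result as a first approximation only.

```python
from urllib.robotparser import RobotFileParser


def is_blocked_for_googlebot(robots_txt: str, url: str) -> bool:
    """Return True if the given robots.txt text disallows Googlebot from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch("Googlebot", url)


# Hypothetical robots.txt content, used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

print(is_blocked_for_googlebot(ROBOTS_TXT, "https://example.com/private/page.html"))  # True
print(is_blocked_for_googlebot(ROBOTS_TXT, "https://example.com/blog/"))              # False
```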

Submitted URL Marked ‘noindex’

The URL you submitted for indexing has a noindex directive, either in an HTTP header or in a meta tag. You will have to remove the meta tag or the header to get the page indexed.
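To find out where the directive comes from, you could check both places with a small script. The sketch below assumes you already have the page's HTML and response headers in hand; the function and class names are hypothetical, and it only looks at the `robots` and `googlebot` meta names and the `X-Robots-Tag` header.

```python
from html.parser import HTMLParser
from typing import Dict, Optional


class _RobotsMetaParser(HTMLParser):
    """Sets self.noindex when a robots/googlebot meta tag contains 'noindex'."""

    def __init__(self) -> None:
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            if "noindex" in attr.get("content", "").lower():
                self.noindex = True


def find_noindex(html: str, headers: Optional[Dict[str, str]] = None) -> Optional[str]:
    """Return 'header', 'meta', or None depending on where a noindex directive is found."""
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            return "header"
    parser = _RobotsMetaParser()
    parser.feed(html)
    return "meta" if parser.noindex else None
```

A result of `"header"` points you to the server configuration, while `"meta"` points you to the page template.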

Submitted URL seems to be a Soft 404

The submitted URL returned a 200-level code even though the page does not appear to exist. The page could also have thin content or redirect to a page that is irrelevant to the URL.

If the page doesn’t exist anymore and you have no replacement, it should return a 404 (not found) or 410 (gone) response code. You can still customize the not-found page for a better user experience. If the page has been moved, it should return a 301 response that redirects to the appropriate page. If your page was flagged incorrectly, inspect the URL to check that Google renders all the content on the page.
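The decision described above can be sketched as a small lookup. The function name and the `live` and `moved` collections are hypothetical; in practice this logic usually lives in your web server or CMS configuration rather than in application code.

```python
from typing import Dict, Optional, Set, Tuple


def response_for(path: str, live: Set[str], moved: Dict[str, str]) -> Tuple[int, Optional[str]]:
    """Return (status code, redirect target) for a requested path."""
    if path in live:
        return 200, None          # the page exists: serve it normally
    if path in moved:
        return 301, moved[path]   # the page moved: redirect permanently
    return 410, None              # gone with no replacement: never answer 200 here


live_pages = {"/", "/pricing"}
moved_pages = {"/plans": "/pricing"}

print(response_for("/plans", live_pages, moved_pages))     # (301, '/pricing')
print(response_for("/old-promo", live_pages, moved_pages)) # (410, None)
```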

Submitted URL Returns Unauthorized Request (401)

Google is unable to crawl your page, most likely because it is password protected. You can fix this by removing the authorization requirement or by allowing Googlebot to access the page after verifying its identity. To verify the error, visit the page in your browser’s incognito mode.

Submitted URL not found (404)

You have submitted a URL for indexing that does not exist. The URL could be coming from your XML sitemap, in which case you will have to remove it from there. If it is not coming from your XML sitemap, find out where it is coming from and remove it there.
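A short script can tell you which of the flagged URLs are actually listed in your sitemap. This sketch assumes a standard `urlset` sitemap passed in as text and a list of 404 URLs copied from the report; the function names and sample data are illustrative only.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def sitemap_urls(xml_text: str) -> List[str]:
    """Return every <loc> value found in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc") if loc.text]


def urls_to_remove(xml_text: str, not_found: Iterable[str]) -> List[str]:
    """Return the sitemap URLs that also appear in the list of 404 URLs."""
    missing = set(not_found)
    return [url for url in sitemap_urls(xml_text) if url in missing]


# Hypothetical sitemap, used only for this example.
SAMPLE_SITEMAP = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/gone</loc></url>
</urlset>
"""

print(urls_to_remove(SAMPLE_SITEMAP, ["https://example.com/gone"]))
# ['https://example.com/gone']
```

Any flagged URL that does not show up in the result is coming from somewhere other than this sitemap.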

Submitted URL returned 403

Your page requires authorized access, and Googlebot never provides credentials. If you want Google to index the page, allow access to anonymous visitors; otherwise, do not submit the page for indexing.
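As a rough summary of the status-code errors above, the following sketch maps an HTTP status code for a submitted URL to the report entry it would most likely fall under. The function is an illustration built from the descriptions in this section, not Google's classification logic. A 200 response can still end up as a noindex or Soft 404 error, and a redirect can still fail, so those cases are only flagged for a closer look.

```python
def classify_submitted_url(status: int) -> str:
    """Map an HTTP status code to the likely Index Coverage error for a submitted URL."""
    if 500 <= status <= 599:
        return "Server error (5xx)"
    if status == 401:
        return "Submitted URL returns unauthorized request (401)"
    if status == 403:
        return "Submitted URL returned 403"
    if status == 404:
        return "Submitted URL not found (404)"
    if 300 <= status <= 399:
        return "Redirect: check for a redirect error"
    if 200 <= status <= 299:
        return "OK: check for noindex or a soft 404"
    return "Other: inspect the URL manually"


print(classify_submitted_url(503))  # Server error (5xx)
print(classify_submitted_url(404))  # Submitted URL not found (404)
```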

Yellow: Valid with Warnings

Here are the reasons why pages will be listed under this status:

Indexed, Though Blocked by Robots.txt

Google managed to index your page even though it is blocked by your website’s robots.txt file. A robots.txt rule does not prevent a page from being indexed, especially if someone links to the page. If you blocked the page unintentionally, unblock it by updating your robots.txt file. If you want to keep the page out of the index, robots.txt is not the right way to do it. Use the noindex tag instead, and leave the page crawlable so that Google can see the tag.

Indexed without Content

Google managed to index your page but could not read its content. Check that the page is not in a format that Google can’t index. The page could also be cloaked, which means that different content or URLs are presented to search engines than to users. Cloaking is a violation of Google’s webmaster guidelines.

Green: Valid

Here are the reasons why your pages are considered valid by Google:

Submitted and indexed: You submitted your URLs for indexing through the sitemap, and Google indexed them with no issues.

Indexed, not Submitted on Sitemap – Google discovered your pages and indexed them, but they are not in the sitemap. Google recommends including all your important pages in a sitemap.

Grey: Excluded

Here are the reasons why Google may exclude your pages:

Excluded by the ‘noindex’ tag: You added a noindex tag to the page, and Google found it when it attempted to crawl the page. However, if the noindex tag was unintentionally added, you can remove it and submit the page for indexing.

Blocked by Page Removal Tool: Someone may have used the URL removal tool on the page, which blocks it from being indexed. The removal is temporary and lasts only about 90 days. After that period, the page can be indexed again if Googlebot comes back and crawls it.

Blocked by Robots.txt: The page is blocked by the robots.txt file on your website, and you can use the robots.txt tester to verify it. Google can still index such a page if it finds information about it without crawling it, although that is not common. If you don’t want the page to be indexed, use the noindex tag.

Crawled, Currently Not Indexed – Google crawled the page but did not index it. It may or may not be indexed in the future, so there is no need to resubmit the page for crawling.

Discovered, Currently Not Indexed – Google found your page but has not crawled it yet. Typically, Google wanted to crawl the page but expected that doing so would overload your website, so it rescheduled the crawl for another time.

More Excluded Issues

Not Found (404) – Google discovered the page, but it returned a 404 error when it was requested. The URL may have been discovered from another site as a link, or it was there on your website and has been deleted. If you have moved the page, it is better to use a 301 redirect.

Alternate Page with Proper Canonical Tag – The page is a duplicate of a page that Google recognizes as canonical, and its canonical tag correctly points to that page. There is nothing you need to do about this status.

Duplicate Without User-Selected Canonical – Google sees the page as duplicate content, and none of the duplicates is explicitly marked as canonical. Google has decided that this page is not the canonical one and has left it out of the index. To avoid this problem, explicitly mark your pages with the correct canonical.

Duplicate, Google Chose Different Canonical than User – You have set this page as canonical, but Google thinks a different page makes a better canonical. Google will index the page that it considers the better canonical instead of this one. To address this, inspect the URL to see which page Google selected, and if you agree with its choice, update your canonical to match.

Page with Redirect: The URL is a redirect, so it was not added to the index. Google crawled your 301 redirect successfully and added the destination URL to its crawl queue in place of the original one.

Soft 404 – Similar to the 404, this status appears when Googlebot finds no real page on your site matching the URL. The difference is that the URL returned a 200-level code even though the page does not appear to exist. To fix the issue, the page has to return a 404 or 410 response if it doesn’t exist anymore. If the page has moved, set up a 301 redirect.

Duplicate, Submitted URL not Selected as Canonical – This is similar to “Duplicate Without User-Selected Canonical”. The difference is that you explicitly submitted the page for indexing by adding it to the XML sitemap. You can fix the issue by clearly marking the page with the correct canonical.
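When you audit the duplicate-related statuses, it helps to see what canonical each page actually declares. The sketch below reads the `rel="canonical"` link from a page's HTML and compares it with the page's own URL. The names are hypothetical, and it only covers canonicals declared in the HTML, not ones sent through HTTP headers or sitemaps.

```python
from html.parser import HTMLParser
from typing import Optional


class _CanonicalParser(HTMLParser):
    """Records the href of the first <link rel="canonical"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = {name: (value or "") for name, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href") or None


def canonical_status(page_url: str, html: str) -> str:
    """Describe the canonical declared in html relative to page_url."""
    parser = _CanonicalParser()
    parser.feed(html)
    if parser.canonical is None:
        return "no canonical declared"
    if parser.canonical == page_url:
        return "self-canonical"
    return f"points to {parser.canonical}"
```

Pages that report "no canonical declared" are the candidates for an explicit canonical tag.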

Blocked Due to Access Forbidden – A 403 response means that the user agent provided credentials but was still not granted access. Because Googlebot never provides credentials, your server is returning this error incorrectly. You can either fix the error or block the page with robots.txt or a noindex tag.

SEO Impacting Issues

Out of all the issues that you will find in the Index Coverage report, the ones that impact SEO should take priority. These are:

- Discovered, currently not indexed

- Crawled, currently not indexed

- Duplicate without user-selected canonical

- Duplicate, submitted URL not selected as canonical

- Submitted URL not found (404)

- Redirect error

- Indexed, though blocked by robots.txt

- Duplicate, Google chose different canonical than user
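If you copy the issue counts out of the report, a few lines of code can turn the list above into a worklist. The sketch below is only an illustration: the input is a hypothetical `{issue name: affected pages}` dictionary that you would fill in yourself, and sorting by page count is one possible way to rank the issues, not a rule from Google.

```python
from typing import Dict, List, Tuple

# The SEO-impacting issues named in the list above.
PRIORITY_ISSUES = [
    "Discovered, currently not indexed",
    "Crawled, currently not indexed",
    "Duplicate without user-selected canonical",
    "Duplicate, submitted URL not selected as canonical",
    "Submitted URL not found (404)",
    "Redirect error",
    "Indexed, though blocked by robots.txt",
    "Duplicate, Google chose different canonical than user",
]


def prioritize(issue_counts: Dict[str, int]) -> List[Tuple[str, int]]:
    """Keep only the priority issues that affect pages, largest count first."""
    priority = {name.lower() for name in PRIORITY_ISSUES}
    rows = [
        (issue, count)
        for issue, count in issue_counts.items()
        if issue.lower() in priority and count > 0
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)
```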

Conclusion: Index Coverage Report

After analyzing the Index Coverage report, you can start improving your website so that Googlebot spends its time crawling the pages that give you value instead of worthless ones. Use robots.txt to improve crawling efficiency, remove worthless URLs where possible, and use canonical or noindex tags to avoid duplicate content.